Rethinking metadata in the modern data stack

As the modern data stack continues to evolve, metadata is no longer a side effect of data project development; it's a strategic asset. In a world driven by concepts like data mesh, governance-as-code, and platform engineering, visibility into metadata isn't just useful; it's foundational.

Yet traditional metadata catalogs are often static, centralized, and disconnected from the systems they’re meant to describe. They quickly fall out of sync, require complex integrations, and rarely empower the engineers closest to the data.

This post explores a lightweight, open-source solution that flips that model on its head using Apache Hop and DuckDB to build pipelines that can audit, validate, and explain themselves. It’s a practical approach to metadata observability using nothing but project files and embedded SQL.

What is DuckDB and why should you care?

DuckDB is an embedded, in-process analytics database purpose-built for fast OLAP (Online Analytical Processing) workflows. Unlike traditional databases that require a dedicated server or service layer, DuckDB runs entirely within your application or script: no infrastructure, no setup, just blazing-fast analytics.

If you've used SQLite for transactional workloads, think of DuckDB as its analytical counterpart, optimized for columnar data access, complex aggregations, joins, filtering, and working with large local datasets like CSV, Parquet, or JSON.

Why DuckDB pairs so well with Apache Hop:

- Embedded by design: No need to run a separate server; DuckDB works right inside your local Hop installation or pipeline runtime.

- Columnar execution engine: Built for speed, especially on wide tables and large data volumes. It’s ideal for metadata analytics and batch profiling.

- Rich SQL syntax: Supports a wide range of ANSI SQL, including window functions, CTEs, JSON operators, and more, perfect for complex pipelines in Apache Hop.

- Built-in support for common file formats: Read from and write to formats like CSV, Parquet, JSON, and more, no connectors or staging needed.

- Extension ecosystem: With extensions like httpfs, excel, spatial, and postgres, DuckDB brings external data directly into your query logic.
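To make the file-format and extension points concrete, here is a minimal sketch in DuckDB SQL. The file names (`orders.csv`, `events/*.parquet`, `orders_by_day.parquet`) and the column `order_date` are hypothetical placeholders, not files from this post; the functions themselves (`read_csv_auto`, `read_parquet`, `COPY ... TO`) are standard DuckDB.

```sql
-- Load the extension that enables reading over HTTP(S) and S3.
-- Recent DuckDB versions can autoload it; installing explicitly is harmless.
INSTALL httpfs;
LOAD httpfs;

-- Query a CSV file directly; column names and types are inferred.
-- 'orders.csv' and order_date are hypothetical.
SELECT order_date, count(*) AS orders
FROM read_csv_auto('orders.csv')
GROUP BY order_date
ORDER BY order_date;

-- Query a folder of Parquet files through a glob pattern (hypothetical path).
SELECT count(*) AS event_count
FROM read_parquet('events/*.parquet');

-- Write a query result straight back out as Parquet, with no staging table.
COPY (
    SELECT order_date, count(*) AS orders
    FROM read_csv_auto('orders.csv')
    GROUP BY order_date
) TO 'orders_by_day.parquet' (FORMAT parquet);
```

Each statement can run from any DuckDB client, or as the SQL of a Hop transform that uses a DuckDB connection.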

The challenge

Today's data teams need more than runtime monitoring or logging. They need their data projects to be:

- Introspective: able to analyze their own structure and configuration

- Transparent: exposing gaps in testing, documentation, or schema coverage

- Actionable: supporting governance and quality checks directly in development workflows

How do you achieve that without relying on heavyweight catalogs or third-party governance platforms?

This approach: DuckDB + Apache Hop

By combining Apache Hop’s declarative, visual orchestration model with DuckDB’s embedded SQL engine, you can turn a project’s own metadata into a fully queryable audit layer, without introducing new infrastructure.

What’s in the stack

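- Apache Hop provides a low-code interface to define ETL workflows and pipelines, and stores project metadata and configuration as JSON files (the pipeline and workflow definitions themselves are XML files).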

- DuckDB can read and query those JSON files directly, using read_json_auto() to infer schemas on the fly.

- Together, they enable pipelines that not only move data, but inspect, validate, and document themselves.

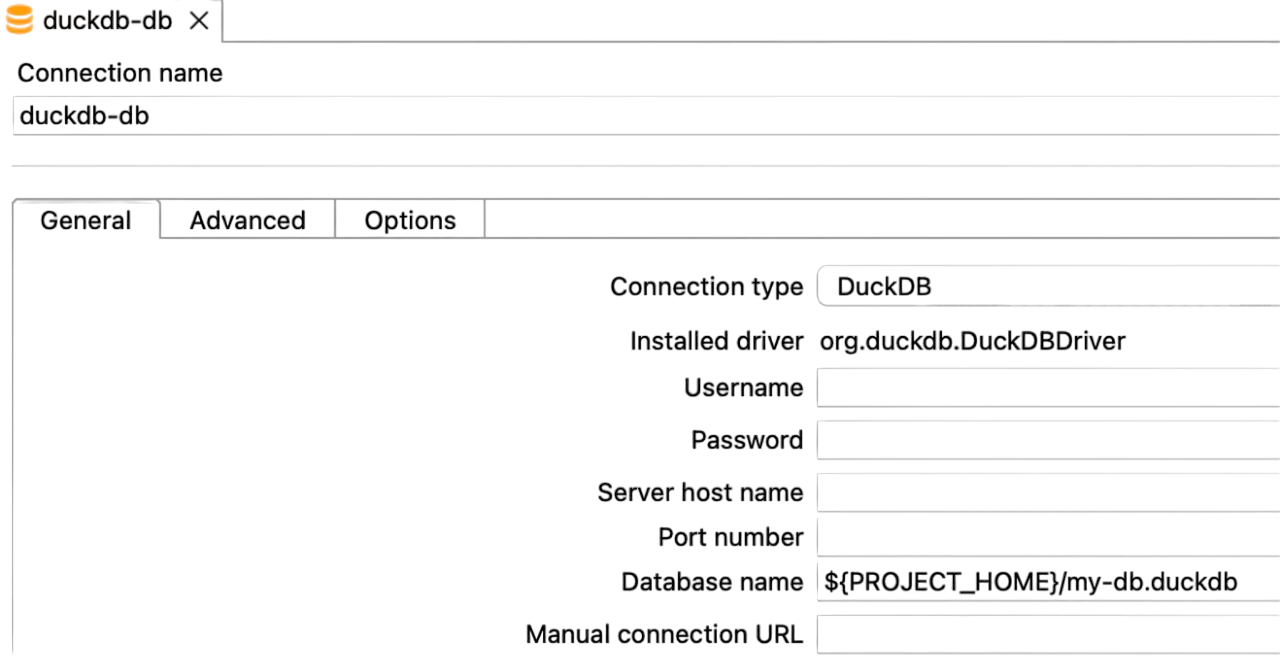

Setting up a DuckDB connection in Apache Hop is fast and frictionless, thanks to DuckDB’s embedded, zero-config design. All you need is a local DuckDB installation and a .duckdb file on your filesystem. In Apache Hop, simply create a new database connection, set the type to DuckDB, and point to your file. That’s it. This plug-and-play setup makes DuckDB ideal for local development, lightweight metadata analysis, and embedding powerful SQL logic directly into your pipelines.

Example 1: Metadata self-audit pipeline

Apache Hop stores its metadata objects (database connections, run configurations, and similar project-level definitions) as JSON files. These files are the blueprint of your platform. By querying them with DuckDB, you can surface insights that would otherwise require a dedicated catalog or governance tool.

This query reads all JSON files recursively from a directory (and its subdirectories), automatically infers their structure, and returns the result as a single unified table, even if the JSON files don’t all follow the same schema.

    SELECT *
    FROM read_json_auto(
        '/Users/user/Downloads/hop/config/projects/samples/**/*.json',
        union_by_name=true,
        ignore_errors=true
    );

Breakdown:

| Component | Explanation |
| --- | --- |
| `read_json_auto(...)` | DuckDB function that reads JSON files and automatically infers column structure (schema) based on the contents. |
| `'/Users/user/Downloads/hop/config/projects/samples/**/*.json'` | A file path with a glob pattern (`**/*.json`) that recursively includes all .json files in this directory and all its subfolders. |
| `union_by_name=true` | Ensures that when combining multiple files with different schemas, DuckDB matches columns by name instead of position. Missing columns will appear as NULL. |
| `ignore_errors=true` | Tells DuckDB to skip content it cannot parse instead of failing the whole query. Useful for large and varied metadata sets. The DuckDB documentation describes this option for newline-delimited JSON, so check how your version treats pretty-printed files. |

You can read this data directly using a Table Input transform in Apache Hop with a configured DuckDB connection.
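A useful first step after loading everything is to see what you actually have. The sketch below adds DuckDB's `filename` option so every row carries its source path, then counts files per parent folder. It assumes each JSON file holds a single top-level object and that the parent folder name identifies the metadata type (for example `rdbms`); both are assumptions about the project layout, not guarantees.

```sql
-- Inventory of metadata files grouped by their parent folder.
-- Assumes '/' path separators and one JSON object per file.
SELECT
    string_split(filename, '/')[-2] AS metadata_type,  -- second-to-last path part
    count(*)                        AS object_count
FROM read_json_auto(
    '/Users/user/Downloads/hop/config/projects/samples/**/*.json',
    union_by_name = true,
    ignore_errors = true,
    filename      = true    -- adds a 'filename' column with the source path
)
GROUP BY metadata_type
ORDER BY object_count DESC;
```

Keeping the `filename` column around is what makes later checks actionable, because every finding can point back to the exact file that needs fixing.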

From there, you can apply rules to:

- Find datasets that lack field-level schema definitions

- Find undocumented components

- Identify missing environment variables

- Detect config drift between environments (dev/test/prod)

- Flag insecure patterns like hardcoded credentials

And these are just a few examples of what’s possible.
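As one illustration, a check for hardcoded credentials could be sketched as follows. It uses DuckDB's `read_text` function (available in recent DuckDB releases) to scan the raw file contents rather than the inferred schema. The rule itself is an assumption made for this example: a `"password"` key whose value is non-empty and does not start with a `${...}` variable reference is treated as suspicious. Hop may also store obfuscated values, so expect to tune the pattern for your own project.

```sql
-- Flag JSON files where a "password" key has a literal (non-variable) value.
-- Heuristic only: the regular expression below is an illustrative assumption.
SELECT filename AS file_path
FROM read_text('/Users/user/Downloads/hop/config/projects/samples/**/*.json')
WHERE regexp_matches(
    content,
    '"password"\s*:\s*"[^"$]'   -- value is neither empty nor starts with '$'
)
ORDER BY file_path;
```

An empty result means no file matched the heuristic; it does not prove the project is free of secrets, since credentials can also hide under other key names.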

All of this happens in a single .hpl Hop pipeline, no external services, no cloud dependencies, and no custom glue code.
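Config drift between environments can be checked in the same style. The sketch below assumes two environment configuration files, `env-dev.json` and `env-prod.json` (hypothetical names), each containing a non-empty `variables` array of objects with a `name` field. That layout is an assumption about how your environment files look, so adjust the paths and field names to match.

```sql
-- Compare variable names defined in two environment files.
WITH dev AS (
    SELECT v.name AS var_name
    FROM (SELECT unnest(variables) AS v
          FROM read_json_auto('env-dev.json'))     -- hypothetical file
),
prod AS (
    SELECT v.name AS var_name
    FROM (SELECT unnest(variables) AS v
          FROM read_json_auto('env-prod.json'))    -- hypothetical file
)
SELECT var_name, 'missing in prod' AS issue
FROM dev
WHERE var_name NOT IN (SELECT var_name FROM prod)
UNION ALL
SELECT var_name, 'missing in dev' AS issue
FROM prod
WHERE var_name NOT IN (SELECT var_name FROM dev);
```

This only compares which variables exist, not their values, which is usually what you want since values are expected to differ between environments.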

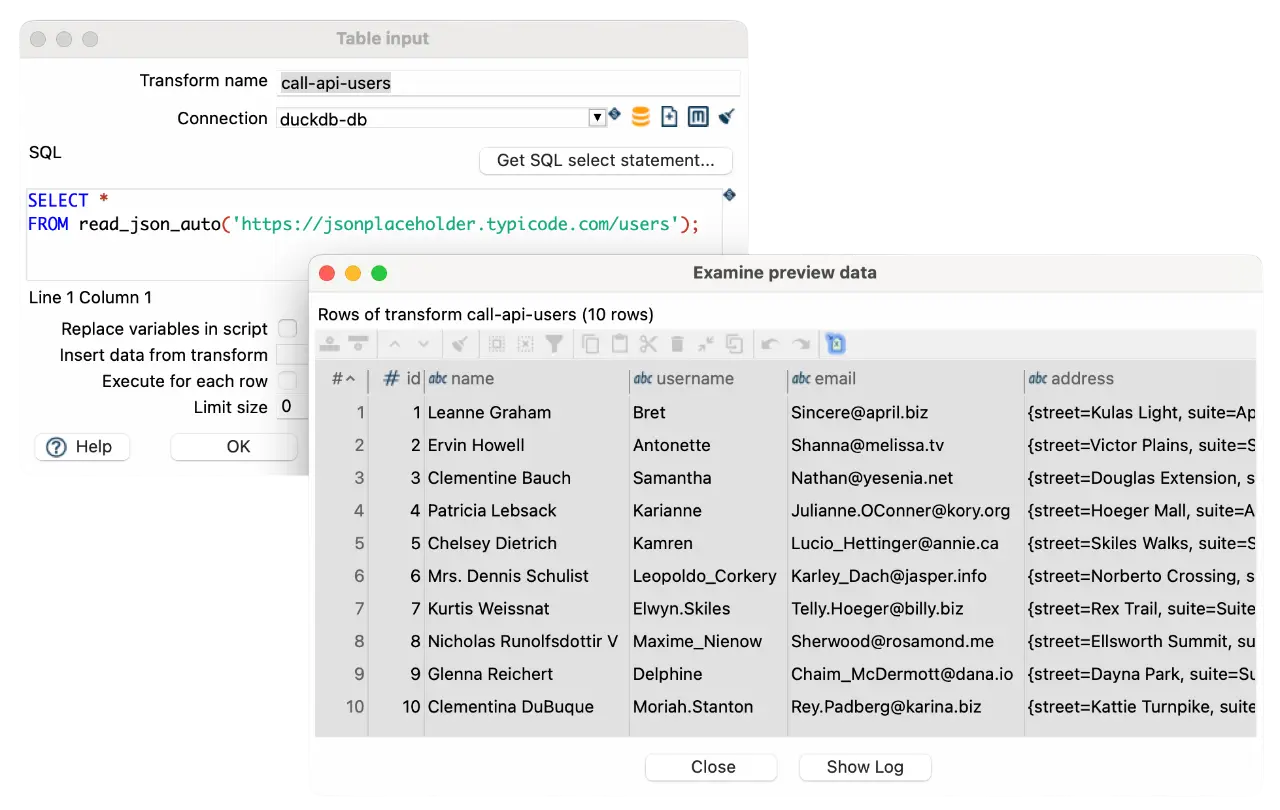

Example 2: Live metadata enrichment from a public API

Observability doesn’t have to stop at static files. Using DuckDB and its httpfs extension, you can query remote JSON APIs as if they were local tables, enabling real-time enrichment of metadata with external context.

Here’s a working example using the JSONPlaceholder API, which returns a structured list of users:

    SELECT *
    FROM read_json_auto('https://jsonplaceholder.typicode.com/users');

This produces a table of mock user metadata: names, emails, cities, even geo-coordinates.

You can read this data directly using a Table Input transform in Apache Hop with a configured DuckDB connection.

Nested JSON structures can also be flattened easily:

    SELECT id, name, email, address.city, address.geo.lat AS lat
    FROM read_json_auto('https://jsonplaceholder.typicode.com/users');

The result is live, queryable context, from a real sample API, embedded directly into your pipeline analytics.

You don’t need to focus on the content of this particular API. The real takeaway is that you can use DuckDB’s JSON functions inside Hop to connect to any API that returns structured metadata. For example, you can use live API responses to:

- Validate naming conventions or ownership metadata across systems

- Enrich internal metadata with attributes from external registries (e.g., team info, tags, access levels)

- Detect mismatches between pipeline configurations and centralized metadata services (e.g., field names, descriptions, responsible teams)

- And much more...
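To sketch the ownership-validation idea, the query below treats the JSONPlaceholder user list as a stand-in for an external team registry and compares it with a local file of declared pipeline owners. The file `pipeline_owners.csv` and its columns `pipeline_name` and `owner_email` are hypothetical and exist only for illustration.

```sql
-- Reading over HTTPS needs the httpfs extension
-- (recent DuckDB versions can load it automatically).
INSTALL httpfs;
LOAD httpfs;

WITH registry AS (
    -- Stand-in for an external registry of people or teams.
    SELECT lower(email) AS email, name
    FROM read_json_auto('https://jsonplaceholder.typicode.com/users')
),
declared AS (
    -- Hypothetical local file: one row per pipeline with its declared owner.
    SELECT pipeline_name, lower(owner_email) AS owner_email
    FROM read_csv_auto('pipeline_owners.csv')
)
-- Pipelines whose declared owner is unknown to the registry.
SELECT d.pipeline_name, d.owner_email
FROM declared AS d
LEFT JOIN registry AS r ON r.email = d.owner_email
WHERE r.email IS NULL;
```

The same left-join-and-filter shape works for tags, access levels, or any other attribute you want to reconcile against an external source.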

Use cases this enables

Combining DuckDB and Hop unlocks a new class of lightweight governance, documentation, and observability workflows:

Self-service observability

Self-service observability becomes possible when metadata is queryable, explainable, and auditable by design. This approach supports:

- Decentralized governance: Empower teams to audit their own projects

- Shift-left validation: Catch metadata issues during development, not after deployment

- Governance-as-code: Replace manual reviews with automated checks

- AI and LLM readiness: Structured JSON and SQL are ideal inputs for metadata embeddings or vector search
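One way to approach governance-as-code with this stack is to express each rule as a query and collect the findings in a single view. The sketch below defines a hypothetical view, `metadata_violations`, with one example rule: metadata objects that have no `name`. It assumes at least one file has a top-level `name` field, since otherwise the column would not exist in the inferred schema.

```sql
-- Collect rule violations in one place; add more rules with UNION ALL.
CREATE OR REPLACE VIEW metadata_violations AS
SELECT
    filename       AS file_path,
    'missing name' AS rule
FROM read_json_auto(
    '/Users/user/Downloads/hop/config/projects/samples/**/*.json',
    union_by_name = true,
    filename      = true
)
WHERE name IS NULL
   OR trim(CAST(name AS VARCHAR)) = '';

-- Summary that a pipeline or CI job can act on.
SELECT rule, count(*) AS violations
FROM metadata_violations
GROUP BY rule;
```

A Hop pipeline could read this view and stop the run when any rows come back, which turns a manual review step into an automated gate.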

From passive to proactive metadata

This approach turns Apache Hop into more than an orchestration tool: it becomes a metadata-aware platform. By embedding DuckDB inside your pipelines, you gain visibility into your pipeline ecosystem without depending on external catalogs or heavyweight governance layers.

The end result is simple: pipelines that explain themselves, governance that runs automatically, and metadata that becomes actionable.
