TL;DR: Hop + Airflow 3.0 = CI/CD-Native, Container-First DataOps

If Apache Hop is how your team defines reusable data logic, then Airflow 3.0 is how you deploy, scale, and monitor that logic across environments, without friction.

- Need local dev? docker compose up

- Need prod-ready orchestration? Push to a registry and deploy on K8s

- Need runtime flexibility? Use Hop inside DockerOperator or KubernetesPodOperator

- Need observability? Airflow 3.0 integrates with OpenLineage through its official OpenLineage provider

The future of data orchestration isn’t shell scripts or monolithic ETL. It’s low-code pipelines/workflows + container-native runtimes + declarative DAGs.

And it starts here:

```shell
curl -LfO 'https://airflow.apache.org/docs/apache-airflow/3.0.2/docker-compose.yaml'
docker compose up
```

Apache Hop + Airflow 3.0: Container-native orchestration

Apache Hop makes low-code pipeline and workflow development accessible. Apache Airflow 3.0 brings orchestration into the container-native era. Together, they redefine how modern data teams prototype, scale, and ship, and they unlock:

- Composable DAGs built on reusable Hop pipelines

- Zero-touch deployment using Docker Compose, Kubernetes, or any container runtime

- Versioned pipeline execution with DAG reproducibility baked in

- Plug-and-play CI/CD support with container-native builds

- Multi-tenant, team-ready orchestration at enterprise scale

If you're building pipelines for analytics, ML, or real-time ingestion, this combo gives you clean separation of concerns: Hop is for design; Airflow is for runtime orchestration.

Here’s how you bootstrap a fully operational Airflow 3.0 instance for testing purposes:

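```shell
# Download the official Compose file for Airflow 3.0.2
curl -LfO 'https://airflow.apache.org/docs/apache-airflow/3.0.2/docker-compose.yaml'
# Create the folders the Compose file mounts and record your host user ID
mkdir -p ./dags ./logs ./plugins ./config
echo -e "AIRFLOW_UID=$(id -u)" > .env
# Initialize the configuration, then the database and admin user
docker compose run airflow-cli airflow config list
docker compose up airflow-init
# Start all services
docker compose up
```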

Want more context? Check the official Airflow Docker Compose guide here: https://airflow.apache.org/docs/apache-airflow/stable/howto/docker-compose/index.html
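If you prefer to script the preparation steps from Python (for example in a CI job), the folder creation and .env step above can be sketched with the standard library. prepare_airflow_project is a hypothetical helper, not part of Airflow; it assumes a POSIX host because os.getuid() is unavailable on Windows, and it does not run any docker compose command.

```python
import os
from pathlib import Path

# Folders the official docker-compose.yaml bind-mounts into the Airflow containers
AIRFLOW_FOLDERS = ("dags", "logs", "plugins", "config")


def prepare_airflow_project(root: Path) -> Path:
    """Hypothetical helper mirroring the shell setup steps.

    Equivalent to:
        mkdir -p ./dags ./logs ./plugins ./config
        echo -e "AIRFLOW_UID=$(id -u)" > .env
    Returns the path of the written .env file.
    """
    for name in AIRFLOW_FOLDERS:
        # parents/exist_ok make this idempotent, like mkdir -p
        (root / name).mkdir(parents=True, exist_ok=True)
    env_file = root / ".env"
    # AIRFLOW_UID makes files created in the mounted folders belong to your host user
    env_file.write_text(f"AIRFLOW_UID={os.getuid()}\n")
    return env_file
```

Call prepare_airflow_project(Path.cwd()) from the folder that holds docker-compose.yaml, then continue with docker compose up airflow-init.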

Airflow 3.0: Not just an upgrade but a platform leap

Why is this release such a big deal for Hop users?

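- Task Execution Interface – Tasks communicate with Airflow through an API instead of the metadata database, enabling isolated and remote execution

- Task SDK – A stable airflow.sdk package for DAG and TaskFlow authoring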

- Scheduler-managed backfills – Historical reprocessing made simple

- Container-first operators – Docker and Kubernetes as first-class citizens

- Plugin system overhaul – Easier to build, scale, and monitor custom integrations

- React + FastAPI core – Snappier UI and API extensibility

- DAG versioning + isolation – A must-have for reproducibility and compliance

Pattern: Hop + Airflow 3.0 + Docker = Declarative, Deployable, Scalable

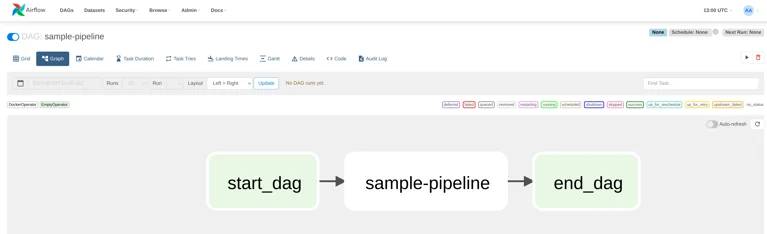

To integrate Apache Hop with Apache Airflow, you can use the DockerOperator to orchestrate Apache Hop pipelines and workflows within a DAG. This pattern allows for low-code/no-code data processes from Hop to be triggered and monitored within the broader orchestration capabilities of Airflow.

The typical pattern is to have Airflow launch a short-lived Apache Hop container through Docker for each task run. Here's how this pattern is implemented:

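- Airflow Setup: Run Apache Airflow using Docker Compose for simplicity. Disable the bundled example DAGs (set AIRFLOW__CORE__LOAD_EXAMPLES to 'false' in the Compose file) and mount the Docker socket (/var/run/docker.sock) into the Airflow containers so Airflow can start containers through the host's Docker daemon.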

- DAG Creation: Define a DAG file in Python. The DAG uses DockerOperator to launch a containerized instance of Apache Hop.
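Before triggering a DAG, it can help to confirm that the Docker socket actually reached the container that runs tasks (airflow-worker in the CeleryExecutor-based official Compose file). The following is a minimal pre-flight sketch, assuming the socket is mounted at the default /var/run/docker.sock. docker_socket_usable is a hypothetical helper; it only inspects the file and does not check whether the Docker daemon responds.

```python
import os
import stat

DEFAULT_DOCKER_SOCKET = "/var/run/docker.sock"


def docker_socket_usable(path: str = DEFAULT_DOCKER_SOCKET) -> bool:
    """Return True if `path` is a Unix socket the current user can read and write."""
    try:
        mode = os.stat(path).st_mode
    except OSError:
        # Missing path or inaccessible parent directory
        return False
    if not stat.S_ISSOCK(mode):
        # Exists, but is a regular file or directory rather than a socket
        return False
    # The Airflow user needs read/write permission to talk to the daemon
    return os.access(path, os.R_OK | os.W_OK)
```

A False result inside the task container usually means the socket mount is missing or the Airflow user lacks permission on it.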

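In Airflow 3.0, DockerOperator comes from the Docker provider package (apache-airflow-providers-docker) and is imported from airflow.providers.docker.operators.docker; the legacy airflow.operators.docker_operator module no longer exists.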

You build Hop pipelines as reusable artifacts. Then you orchestrate them like this:

```python
from datetime import datetime, timedelta

from airflow.sdk import DAG
from airflow.providers.docker.operators.docker import DockerOperator
from airflow.providers.standard.operators.empty import EmptyOperator
from docker.types import Mount

# Arguments applied to every task in the DAG
default_args = {
    'owner': 'airflow',
    'depends_on_past': False,
    'start_date': datetime(2022, 1, 1),
    'email_on_failure': False,
    'email_on_retry': False,
    'retries': 1,
    'retry_delay': timedelta(minutes=5),
}

with DAG(
    'sample-pipeline',
    description='sample-pipeline',
    default_args=default_args,
    schedule=None,  # triggered manually from the UI
    catchup=False,
    is_paused_upon_creation=False,
) as dag:

    start_dag = EmptyOperator(task_id='start_dag')

    end_dag = EmptyOperator(task_id='end_dag')

    hop = DockerOperator(
        task_id='sample-pipeline',
        # Apache Hop Docker image; pin a release tag (apache/hop:<tag>) for reproducible runs
        image='apache/hop:Development',
        api_version='auto',
        # auto_remove='success',  # remove the container after a successful run
        # Execution context read by the Hop image at startup
        environment={
            'HOP_RUN_PARAMETERS': 'INPUT_DIR=',
            'HOP_LOG_LEVEL': 'Basic',
            'HOP_FILE_PATH': '${PROJECT_HOME}/transforms/null-if-basic.hpl',
            'HOP_PROJECT_DIRECTORY': '/project',
            'HOP_PROJECT_NAME': 'hop-airflow-sample',
            'HOP_ENVIRONMENT_NAME': 'env-hop-airflow-sample.json',
            'HOP_ENVIRONMENT_CONFIG_FILE_NAME_PATHS': '/project-config/env-hop-airflow-sample.json',
            'HOP_RUN_CONFIG': 'local',
        },
        docker_url='unix://var/run/docker.sock',
        network_mode='bridge',
        # Bind-mount sources are paths on the Docker host, not inside the Airflow container
        mounts=[
            Mount(source='/Users/bart/projects/tech/apache-airflow/3.x/samples', target='/project', type='bind'),
            Mount(source='/Users/bart/projects/tech/apache-airflow/3.x/samples-config/', target='/project-config', type='bind'),
        ],
        force_pull=True,
    )

    start_dag >> hop >> end_dag
```
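When several DAGs run Hop, the environment dictionary can be built by a small helper so the variable names stay consistent. This is a hypothetical sketch, not part of Hop or Airflow. It assumes the apache/hop image reads HOP_RUN_PARAMETERS as comma-separated NAME=VALUE pairs, so it rejects parameter names containing "," or "="; values containing commas are not escaped.

```python
from typing import Dict, Mapping, Optional


def format_hop_run_parameters(params: Mapping[str, str]) -> str:
    """Render {"A": "1", "B": "2"} as "A=1,B=2" (the assumed HOP_RUN_PARAMETERS format)."""
    for name in params:
        if "," in name or "=" in name:
            raise ValueError(f"invalid Hop parameter name: {name!r}")
    return ",".join(f"{name}={value}" for name, value in params.items())


def build_hop_environment(
    file_path: str,
    project_name: str,
    environment_name: str,
    environment_config_paths: str,
    project_directory: str = "/project",
    run_config: str = "local",
    log_level: str = "Basic",
    parameters: Optional[Mapping[str, str]] = None,
) -> Dict[str, str]:
    """Return the HOP_* variables passed to DockerOperator(environment=...)."""
    return {
        "HOP_RUN_PARAMETERS": format_hop_run_parameters(parameters or {}),
        "HOP_LOG_LEVEL": log_level,
        "HOP_FILE_PATH": file_path,
        "HOP_PROJECT_DIRECTORY": project_directory,
        "HOP_PROJECT_NAME": project_name,
        "HOP_ENVIRONMENT_NAME": environment_name,
        "HOP_ENVIRONMENT_CONFIG_FILE_NAME_PATHS": environment_config_paths,
        "HOP_RUN_CONFIG": run_config,
    }
```

Because environment is a templated field of DockerOperator, a parameter value such as {{ dag_run.conf.get('INPUT_DIR', '') }} is rendered by Airflow at run time, after this helper has built the string.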

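- Mount Volumes: Mount your local project directory (with your Hop configuration, pipelines, and workflows) and your environment configuration folder into the container. This ensures Hop has access to all necessary files inside the Docker environment. Because Airflow talks to the host's Docker daemon, the source paths should be absolute paths on the Docker host, not paths inside the Airflow containers.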

```python
# Inside the DockerOperator(...) call; replace the placeholders with host paths
mounts=[Mount(source='LOCAL_PATH_TO_PROJECT_FOLDER', target='/project', type='bind'),
        Mount(source='LOCAL_PATH_TO_ENV_FOLDER', target='/project-config', type='bind')],
```
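Because bind-mount sources are resolved by the Docker daemon on the host, a wrong path only surfaces when the task starts. A small pre-flight check run on the Docker host can catch placeholders such as LOCAL_PATH_TO_PROJECT_FOLDER that were never replaced. check_bind_sources is a hypothetical helper; it only checks that each path is absolute and is an existing directory.

```python
import os
from typing import Iterable, List


def check_bind_sources(sources: Iterable[str]) -> List[str]:
    """Return a list of problems with host paths intended as bind-mount sources."""
    problems = []
    for source in sources:
        if not os.path.isabs(source):
            # Catches unreplaced placeholders and relative paths
            problems.append(f"{source}: not an absolute path")
        elif not os.path.isdir(source):
            problems.append(f"{source}: directory does not exist")
    return problems
```

An empty list means both folders exist on the machine where the check ran; any entry points at the mount that would fail.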

- Environment Variables: Configure environment variables to pass execution context to Hop. These include:

- HOP_FILE_PATH: Path to the Hop pipeline or workflow to execute.

- HOP_PROJECT_DIRECTORY, HOP_PROJECT_NAME: Define project context.

- HOP_ENVIRONMENT_NAME, HOP_ENVIRONMENT_CONFIG_FILE_NAME_PATHS: Specify environment configurations.

- HOP_RUN_PARAMETERS: Pass runtime parameters to the pipeline.

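- HOP_RUN_CONFIG: The name of the Hop run configuration to use; local typically selects the standard local execution engine.

- HOP_LOG_LEVEL: Logging verbosity, for example Basic or Detailed.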

- Airflow DAG Folder: Place your DAG file in the dags/ folder. Airflow will automatically pick it up and list it in the UI.

-

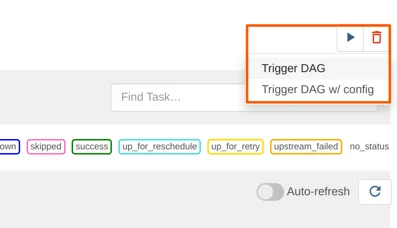

Running the DAG: Use the “Trigger DAG” button in Airflow to execute the Hop pipeline. Monitor execution logs and results through the Airflow UI.

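- Parameterization: You can further parameterize your DAG to accept input parameters at runtime using Airflow's "Trigger DAG w/ config" feature. The supplied JSON is available to templates as dag_run.conf, and because the environment field of DockerOperator is templated, you can pass those values to Hop pipelines through environment variables such as HOP_RUN_PARAMETERS, enabling dynamic and reusable workflows.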

- Advanced Use: For pipelines that depend on variables defined in environment files, ensure correct mounts and file paths. Use HOP_LOG_LEVEL=Detailed to expose full execution context and parameter values in the logs.

- Scheduling DAGs: While the previous examples involved manually triggering DAGs, Apache Airflow offers many built-in scheduling options. The most common approach is to pass a cron expression to the schedule parameter of your DAG definition; Airflow 3.0 removed the older schedule_interval parameter. For instance, to run a DAG every day at 10:00 AM (UTC unless you configure another timezone), set schedule='0 10 * * *' in the with DAG(...) block. Airflow then handles automatic execution based on that schedule. Although DAG scheduling isn't specific to Apache Hop, it's important for operationalizing your data pipelines.

```python
with DAG(
    'sample-pipeline',
    default_args=default_args,
    schedule='0 10 * * *',  # every day at 10:00
    catchup=False,
    is_paused_upon_creation=False,
) as dag:
    ...  # define start_dag, hop, and end_dag as before
```
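To see which wall-clock times '0 10 * * *' selects, here is a small illustration that computes the next 10:00 slot after a given moment. It is only a sketch of what the cron expression means, not Airflow's scheduler or timetable logic, and it assumes naive datetimes expressed in the DAG's timezone.

```python
from datetime import datetime, timedelta


def next_daily_run(after: datetime, hour: int, minute: int = 0) -> datetime:
    """Return the first time strictly after `after` that matches 'minute hour * * *'."""
    candidate = after.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if candidate <= after:
        # Today's slot has passed (or is right now), so use the same time tomorrow
        candidate += timedelta(days=1)
    return candidate
```

For example, next_daily_run(datetime(2025, 1, 31, 11, 0), hour=10) returns datetime(2025, 2, 1, 10, 0): the 10:00 slot on January 31 has already passed, so the next match rolls over into February.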

This pattern enables citizen data engineers to integrate Apache Hop’s pipeline execution into a broader orchestration framework, taking advantage of Airflow’s scheduling, monitoring, and workflow dependencies without needing deep coding skills.

Looking for reliable support to power your data projects?

Our enterprise team is here to help you get the most out of Apache Hop. Let’s connect and explore how we can support your success.