In a world where organizations generate content at breakneck speed, the ability to transform that content into structured, meaningful insights is becoming a critical part of digital operations. At know.bi, we’ve been exploring how Apache Hop can help bridge the gap between raw digital content and the structured intelligence needed for better decisions.

From blog posts to business value

Publishing content is no longer the end goal; it’s just the beginning. Blog articles, how-to guides, and knowledge base entries contain valuable signals that can power SEO, personalization, internal search, reporting, and even future content planning.

But the challenge lies in the format. Most content lives in complex HTML pages, often surrounded by navigation, ads, and styling code, so it’s not built for analysis. Manually extracting and summarizing hundreds of articles isn’t just inefficient; it becomes unsustainable as content libraries grow.

What we need is a way to automate the transformation of these long-form pieces into clean, structured summaries that can feed into downstream systems.

Structured orchestration meets intelligent summarization

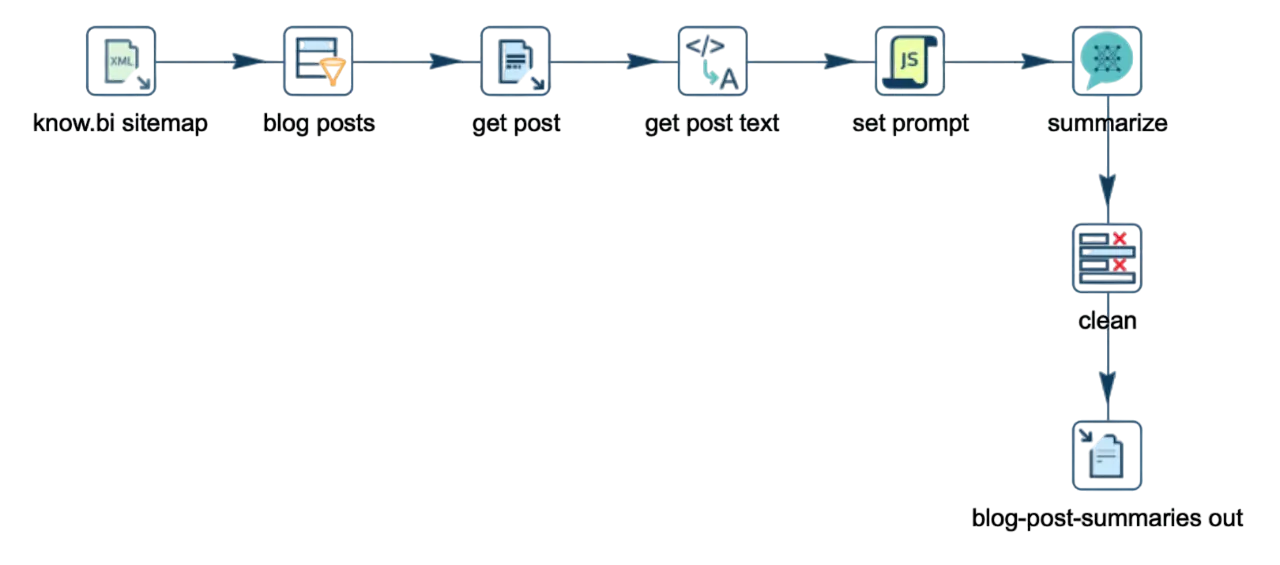

Using Apache Hop, we’ve built a content pipeline that automates the journey from raw blog content to actionable summaries. While large language models (LLMs) are part of the solution, the real engine behind it all is Hop, providing the data plumbing, automation logic, and modular control required to make this system both reliable and extensible.

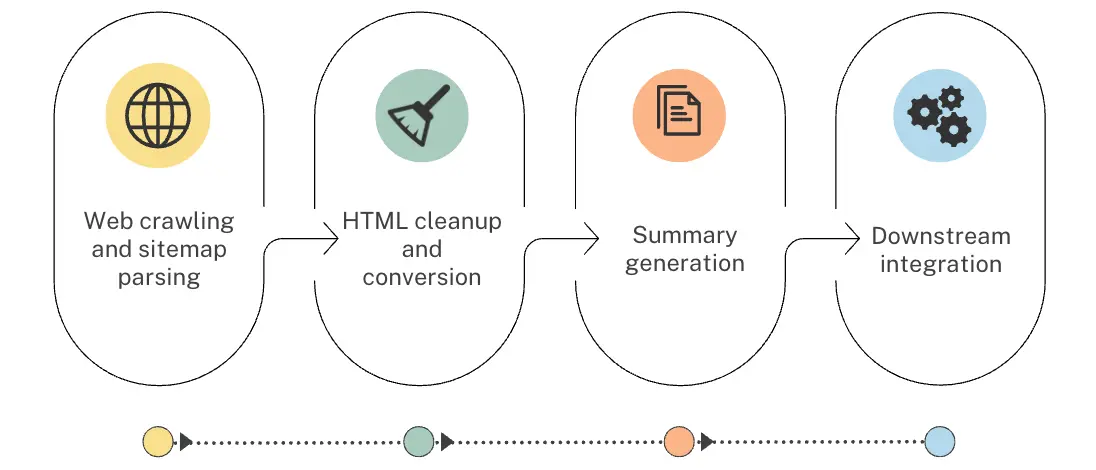

Here’s a high-level overview of how it works:

- Web crawling and sitemap parsing: We begin by collecting a structured list of content directly from our own website, using sitemap definitions to target relevant pages.

- HTML cleanup and conversion: Each article is extracted and converted from raw HTML into readable plain text, stripping out noise such as navigation and ads while preserving the article’s structure.

- Summary generation: Once clean text is available, we build an LLM prompt that asks the model to summarize it. The prompts in this example are quite basic, but Apache Hop has everything you need to build more complex ones. The model itself is interchangeable depending on your requirements; the Language Model Chat transform offers a wide range of options.

- Downstream integration: The final summaries are made available for multiple uses: metadata enrichment, content recommendations, SEO audits, and internal analytics.
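Hop pipelines are assembled from transforms in a visual designer rather than written as code, so the snippet below is not taken from the know.bi project. It is a minimal Python sketch of what the sitemap parsing step does, assuming a standard sitemaps.org `<urlset>` file. The function name `article_urls` and the `/blog/` path filter are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace used by sitemaps.org <urlset> documents.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def article_urls(sitemap_xml: str, path_prefix: str = "/blog/") -> list[str]:
    """Return the <loc> URLs in a sitemap whose path starts with path_prefix.

    The "/blog/" default is a hypothetical filter; adapt it to your site.
    """
    root = ET.fromstring(sitemap_xml)
    urls = []
    for loc in root.findall("sm:url/sm:loc", SITEMAP_NS):
        url = (loc.text or "").strip()
        # Keep only pages in the targeted section (e.g. blog articles).
        if urlparse(url).path.startswith(path_prefix):
            urls.append(url)
    return urls
```

Each returned URL would then be fetched and handed to the HTML cleanup step. A sitemap index file (`<sitemapindex>`) would need one more level of fetching, which this sketch leaves out.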
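To illustrate the HTML cleanup step outside of Hop, here is a minimal sketch using Python’s standard `html.parser` module. It is a stand-in, not the transform used in the pipeline described here. It drops elements assumed to be page chrome (such as `nav`, `footer`, and `script`) and keeps headings and paragraphs on separate lines. The tag lists and the names `ArticleTextExtractor` and `html_to_text` are assumptions to adapt to your own page templates.

```python
from html.parser import HTMLParser

# Elements whose whole content is treated as noise (assumed list; tune per site).
SKIP_TAGS = {"script", "style", "nav", "header", "footer", "aside", "noscript"}
# Block-level elements that should start a new line in the plain-text output.
BLOCK_TAGS = {"p", "div", "br", "li", "h1", "h2", "h3", "h4", "h5", "h6",
              "article", "section"}


class ArticleTextExtractor(HTMLParser):
    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)  # decode &amp; etc. in text
        self.skip_depth = 0  # greater than 0 while inside a SKIP_TAGS element
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in SKIP_TAGS:
            self.skip_depth += 1
        elif tag in BLOCK_TAGS and not self.skip_depth:
            self.parts.append("\n")

    def handle_endtag(self, tag):
        if tag in SKIP_TAGS and self.skip_depth:
            self.skip_depth -= 1
        elif tag in BLOCK_TAGS and not self.skip_depth:
            self.parts.append("\n")

    def handle_data(self, data):
        if not self.skip_depth:
            self.parts.append(data)


def html_to_text(html: str) -> str:
    parser = ArticleTextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace inside each line and drop empty lines.
    lines = (" ".join(line.split()) for line in "".join(parser.parts).splitlines())
    return "\n".join(line for line in lines if line)
```

Keeping one line per block element preserves a rough outline of the article (headings, paragraphs, list items), which tends to help the summarization step more than a single flattened string.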
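Prompt construction and the interchangeable model can also be sketched in a few lines of Python. The template below is a hypothetical example of a basic summarization prompt. `ChatModel` is an assumed stand-in for whichever model is configured; in the pipeline described here, that role belongs to Hop’s Language Model Chat transform. The names `build_summary_prompt` and `summarize` are illustrative only.

```python
from typing import Callable

# Assumed interface: any callable that sends a prompt to a model and returns its reply.
ChatModel = Callable[[str], str]

# Hypothetical template, kept deliberately basic.
PROMPT_TEMPLATE = (
    "Summarize the following blog article in at most {max_sentences} sentences.\n\n"
    "Title: {title}\n\n"
    "Article:\n{body}"
)


def build_summary_prompt(title: str, body: str, max_sentences: int = 3,
                         max_chars: int = 8000) -> str:
    # Truncate very long articles, preferably at a word boundary,
    # so the prompt stays within the model's context window.
    if len(body) > max_chars:
        body = body[:max_chars].rsplit(" ", 1)[0] + " [...]"
    return PROMPT_TEMPLATE.format(title=title, body=body, max_sentences=max_sentences)


def summarize(title: str, body: str, model: ChatModel) -> str:
    # The prompt logic does not depend on which model sits behind `model`.
    return model(build_summary_prompt(title, body)).strip()
```

Swapping models means passing a different `model` function while the prompt logic stays unchanged. Truncating by characters is only a crude proxy for the model’s token limit; a tokenizer for the chosen model would give a tighter bound.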

Apache Hop’s role in this process is central. It handles the pipeline definition, manages execution, and provides a clean, transparent way to integrate LLMs into your data pipelines, making the entire process traceable, debuggable, and easy to scale.

Looking for reliable support to power your data projects?

Our enterprise team is here to help you get the most out of Apache Hop. Let’s connect and explore how we can support your success.